🦙 🤖 Experimenting with LLaMA

This week, I got access to Meta’s LLaMA LLM! Now I get to test out all the repos I starred while waiting for this day :)

Namely:

- facebookresearch/llama

- ggerganov/llama.cpp

- antimatter15/alpaca.cpp (now upstreamed to llama.cpp)

- tatsu-lab/stanford_alpaca

- tloen/alpaca-lora

Forms of LLaMA have been run on laptops (including M-series Macs), Chromebooks, and even Raspberry Pis! The only issue is that downloading all the models takes north of 250GB, which is more storage than I can spare on my laptop right now, unfortunately.

I only downloaded the 7B and 13B models for now – it seems their fine-tuned versions are very performant anyway (see Stanford Alpaca).

I’m interested to see how (or if) the LLaMA & Alpaca 7B and 13B models perform locally on my Thinkpad laptop. It’s spec’d with an Intel Core i5 and 16GB RAM, so I expect it to be relatively slow compared to the likes of ChatGPT. It’s still amazing that such a powerful model can run locally in the first place though. Gotta keep perspective.
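As a rough sanity check on whether these models fit in 16GB of RAM, here's a back-of-the-envelope sketch. It assumes nominal parameter counts of 7 and 13 billion and counts only the weights, so the numbers are lower bounds: real quantized files carry some extra per-block metadata, and inference needs additional memory on top of the weights.

```python
def weights_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in gigabytes (10^9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


# Nominal parameter counts (an assumption; the exact counts differ slightly).
for name, n_params in [("7B", 7e9), ("13B", 13e9)]:
    f16 = weights_size_gb(n_params, 16)  # 16-bit floats
    q4 = weights_size_gb(n_params, 4)    # 4-bit quantized
    print(f"{name}: ~{f16:.1f} GB at 16-bit, ~{q4:.1f} GB at 4-bit")
```

By this estimate the 16-bit 13B weights alone would not fit in 16GB, which is a big part of why the 4-bit quantization step later on matters.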

Setup

LLaMA

Background

The [paper] is very digestible – check it out!

LLaMA was trained on the following dataset (Table 1):

|Dataset|Sampling %|
|-|-|
|CommonCrawl|67.0%|
|C4|15.0%|
|Github|4.5%|
|Wikipedia|4.5%|
|Books|4.5%|
|ArXiv|2.5%|
|StackExchange|2.0%|
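One way to picture what a "sampling %" means is to draw training sources at random with those weights. The sketch below is only an illustration of weighted sampling using the Table 1 numbers; the function name `sample_sources` is made up here, and this is not Meta's actual data pipeline.

```python
import random
from collections import Counter

# Sampling proportions from Table 1, as listed above.
SAMPLING_PCT = {
    "CommonCrawl": 67.0,
    "C4": 15.0,
    "Github": 4.5,
    "Wikipedia": 4.5,
    "Books": 4.5,
    "ArXiv": 2.5,
    "StackExchange": 2.0,
}


def sample_sources(n, seed=0):
    """Pick n source names at random, weighted by their sampling proportion."""
    rng = random.Random(seed)
    names = list(SAMPLING_PCT)
    weights = list(SAMPLING_PCT.values())
    return rng.choices(names, weights=weights, k=n)


counts = Counter(sample_sources(10_000))
for name, count in counts.most_common():
    print(f"{name:>13}: {count / 100:.1f}%")
```

With enough draws, the observed shares should land close to the table's percentages.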

Steps

First, I downloaded the 7B and 13B LLaMA models with the download script and approved URL Meta emailed me.

The llama.cpp repo has great docs on how to get set up, but it boils down to:

git clone git@github.com:ggerganov/llama.cpp.git

cd llama.cpp

make

To import the LLaMA models, copy the files downloaded by the Meta download script into llama.cpp/models/.

Then convert the model to ggml (see ggerganov/ggml) and quantize the model weights:

python3 -m pip install -r requirements.txt

python3 convert.py models/7B/

./quantize ./models/7B/ggml-model-f16.bin ./models/7B/ggml-model-q4_0.bin 2
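To make "quantize the model weights" a bit more concrete, here's a simplified sketch of block-wise 4-bit quantization: each block of weights is stored as small integers plus one shared scale. This is my own illustration of the general idea, not the actual q4_0 format or llama.cpp's code, and the helper names are made up.

```python
def quantize_block(values):
    """Map a block of floats to integers in [-7, 7] plus one float scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    quants = [max(-7, min(7, round(v / scale))) for v in values]
    return scale, quants


def dequantize_block(scale, quants):
    """Recover approximate floats from the integers and the shared scale."""
    return [q * scale for q in quants]


block = [0.12, -0.53, 0.98, -1.4, 0.0, 0.31, -0.07, 0.66]
scale, quants = quantize_block(block)
restored = dequantize_block(scale, quants)
max_err = max(abs(a - b) for a, b in zip(block, restored))

print("quantized:", quants)
print(f"scale: {scale:.3f}, max round-trip error: {max_err:.3f}")
```

The round-trip error is bounded by about half the scale, which is the precision traded away for a much smaller file.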

To run inference:

./main -m ./models/7B/ggml-model-q4_0.bin -n 128

Remember, LLaMA is a base model akin to GPT-3 rather than a chat assistant, so you need to give it the start of a text and let the model predict the rest.
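If you'd rather drive this from a script, a small wrapper can build the command with a prompt attached. This sketch assumes the `-p` prompt flag of `./main` and the model path used above; the function `build_main_command` and the example prompt are my own.

```python
import shlex


def build_main_command(prompt, model="./models/7B/ggml-model-q4_0.bin", n_predict=128):
    """Build the argument list for llama.cpp's ./main with a completion-style prompt."""
    return ["./main", "-m", model, "-n", str(n_predict), "-p", prompt]


# Phrase the prompt as the beginning of a text for the model to continue.
cmd = build_main_command("The three primary colors are")
print(shlex.join(cmd))

# To actually run it from inside the llama.cpp directory:
# import subprocess
# subprocess.run(cmd, check=True)
```

Passing the arguments as a list avoids shell-quoting problems when the prompt contains quotes or newlines.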

For a chat interface you may be more familiar with (akin to ChatGPT):

./examples/chat.sh

Alpaca

Background

Stanford Alpaca (see tatsu-lab/stanford_alpaca) is a fine-tuned model based on the foundational LLaMA that’s better at instruction-following. If you check out the repo, you’ll see the data and methodology the Stanford lab used to fine-tune the LLaMA model.
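Instruction-following here comes down to wrapping each request in a fixed prompt template before the model sees it. The sketch below uses the no-input template as I understand it from the stanford_alpaca repo; treat the exact wording as an assumption and check the repo before relying on it. The function name is made up.

```python
# Template for instructions without extra input (wording assumed from the repo).
ALPACA_TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Response:\n"
)


def format_alpaca_prompt(instruction):
    """Wrap a plain instruction in the Alpaca-style prompt template."""
    return ALPACA_TEMPLATE.format(instruction=instruction.strip())


print(format_alpaca_prompt("What are the nutritional facts of a banana?"))
```

The model then continues the text after "### Response:", which is what makes it feel like it is answering rather than just completing.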

However, since Alpaca was released, there have been a lot of interesting developments!

LoRA (Low-Rank Adaptation):

We propose Low-Rank Adaptation, or LoRA, which freezes the pre-trained model weights and injects trainable rank decomposition matrices into each layer of the Transformer architecture, greatly reducing the number of trainable parameters for downstream tasks. [paper]

Alpaca-LoRA (see tloen/alpaca-lora), as the name suggests, uses LoRA to fine-tune LLaMA. The authors claim that training “runs within hours on a single RTX 4090.”
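To get a feel for why LoRA cuts the trainable parameter count so much, here's a tiny pure-Python sketch. The frozen weight matrix `W` gets an update `B @ A` built from two thin matrices, and only those two are trained. The sizes are toy values, the helper names are my own, and starting `B` at zero follows my reading of the LoRA paper.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a, b):
    """Element-wise sum of two matrices of the same shape."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def lora_trainable_params(d_out, d_in, rank):
    """Parameters in B (d_out x rank) plus A (rank x d_in)."""
    return rank * (d_in + d_out)


d, r = 8, 2  # toy sizes for illustration
rng = random.Random(0)
W = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(d)]     # frozen weights
A = [[rng.gauss(0, 0.01) for _ in range(d)] for _ in range(r)]  # trainable, r x d
B = [[0.0] * r for _ in range(d)]                               # trainable, d x r

W_adapted = add(W, matmul(B, A))  # effective weights: W + B @ A
print("unchanged before training:", W_adapted == W)

# A 4096 x 4096 layer with rank 8 (both values picked for illustration).
full = 4096 * 4096
lora = lora_trainable_params(4096, 4096, 8)
print(f"full: {full:,}  LoRA: {lora:,}  ratio: {full // lora}x")
```

Because `B` starts at zero, the adapted model behaves exactly like the original until training moves `A` and `B`.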

Cleaned Alpaca Dataset

Some smart people combed through the dataset used to train the original Alpaca model and saw room for improvement. They made 🤗/yahma/alpaca-cleaned, which conveniently can be used by Alpaca-LoRA!

Experiments

Using Alpaca 7B via llama.cpp:

./examples/alpaca.sh

🍌 Exp 1: Bananas

❓ What are the nutritional facts of a banana

💭 A banana is a nutrient-dense fruit, providing vitamins and minerals such as Vitamin C, Potassium, Iron, Copper, Calcium, and Phosphorus. It is also a good source of dietary fiber, containing about 3 grams per medium-sized banana. Additionally, a banana provides about 110 calories and contains about 16.3 grams of total carbohydrates.

❓ Suggest a tasty breakfast I can make that has bananas in it

💭 Banana Pancakes: Ingredients: 2 cups of all-purpose flour, 2 teaspoons of baking powder, 1 teaspoon of salt, 1/2 cup of white sugar, 1/2 cup of brown sugar, 1/2 cup of vegetable oil, 2 eggs, 1 teaspoon of vanilla extract, 1/3 cup of chopped walnuts (optional), 1/2 cup of mashed ripe bananas.

Instructions:

- Preheat a non-stick pan over medium-high heat.

- In a large bowl, whisk together the flour, baking powder, salt, white sugar, brown sugar, vegetable oil, eggs, vanilla extract and chopped walnuts (optional).

- Grease the preheated pan with a teaspoon of butter.

- Pour 1/4 cup of the batter onto the preheated pan.

- Cook until golden brown, flip and cook the other side.

- Serve with syrup, powdered sugar and fresh fruit. Enjoy!

❓ Please change this recipe to accommodate a nut allergy

💭 This recipe can be adapted for a nut allergy by replacing the nuts with another type of nut-free ingredient, such as sunflower seeds, pumpkin seeds, or coconut flakes.

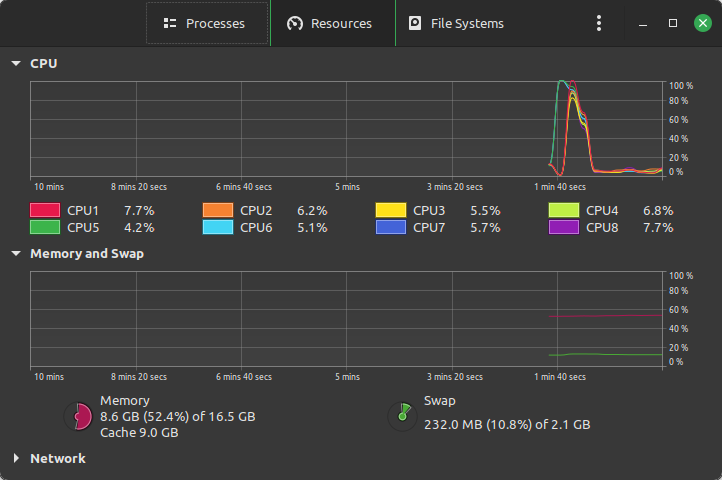

Check out that CPU utilization! Near-100% while the process is running.